In the age of intelligent systems, simply having access to AI isn’t enough. The real advantage comes from building a custom automation playground that puts you in full control. Whether you’re streamlining tasks, building interactive agents, or integrating data-driven decisions into your operations, combining N8N, PostgreSQL, and OpenAI gives you a workflow engine, persistent storage, and a language model in one stack.

This guide shows how to install N8N with its AI Agent capabilities, connect it to a PostgreSQL database, and launch the whole stack with Docker, with no cloud lock-in and, if you pair it with a local model such as Ollama, no third-party services. You’ll create a private, self-hosted setup that can:

- Run advanced workflows using a visual editor

- Connect to local or cloud-based language models like OpenAI or Ollama

- Store contextual memory with PostgreSQL

- Handle real-time API events and triggers

From developers building AI agents to automation engineers and hobbyists experimenting with intelligent workflows — this setup is designed to scale with your ambitions.

Ready to install N8N AI Agent and design your own intelligent automation system? Let’s dive in and build it, from the ground up — step by step, fully documented, and 100% in your control.

Stack Overview

To build a reliable and intelligent automation system, you need more than just a chatbot—you need a robust stack that blends orchestration, intelligence, and persistence. That’s where this setup shines.

At the heart of the system is N8N, a powerful open-source workflow engine that lets you visually build and automate complex flows with zero code. It acts as the central brain, managing triggers, tasks, and logic between services and APIs.

On top of that, we add an AI Agent Playground—a modular layer where language models like OpenAI or Ollama can respond intelligently to prompts, external input, or internal events. This playground serves as your experimentation ground for testing LLM-powered behaviors, chains, and decision-making.

To store memory, state, logs, and context, we integrate PostgreSQL. This persistent database allows your agents to remember conversations, record results, and maintain continuity across sessions.

The result? A flexible, Docker-based AI automation architecture that can run securely on your local machine or scale up on cloud infrastructure—with optional features like SSL, domain integration, and API authentication.

Here’s a breakdown of what you’ll be setting up:

- N8N: Open-source workflow automation tool (Dockerized)

- AI Agent Playground: A modular AI assistant using prompt chains

- Database: PostgreSQL (or SQLite as fallback) for state and memory

- Authentication: Basic user auth or token access

- Optional: Nginx + SSL with Cloudflare

All components will run locally or on a VPS using Docker Compose.

Preparation

Before we dive into the hands-on setup, let’s take a moment to prepare the foundation. A solid environment ensures that the entire AI agent stack runs smoothly, whether you’re deploying locally or on a VPS.

Our goal is to build a secure, isolated, and reproducible environment using Docker. Docker allows you to containerize your services—such as N8N, the PostgreSQL database, and supporting tools—so they remain consistent and easy to manage across different machines or deployment stages.

In this section, we’ll walk through how to:

- Install Docker and Docker Compose on your system

- Create a dedicated working directory for the project

- Lay the groundwork for launching the full AI playground

These steps are simple but crucial. Once completed, your system will be ready to spin up the entire infrastructure with a single command.

Install Docker and Docker Compose:

    sudo apt update && sudo apt install -y docker.io docker-compose curl

Create a working directory:

    mkdir n8n-ai-stack && cd n8n-ai-stack
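Before moving on, it can help to confirm that the tools installed above are actually on your PATH. The following is a minimal sketch in Python; the function name `missing_tools` is made up for this example, and the tool list simply mirrors the packages named in the install command.

```python
import shutil


def missing_tools(tools=("docker", "docker-compose", "curl"), which=shutil.which):
    """Return the names of required command-line tools that are not on PATH.

    `which` is injectable so the check can be exercised without touching
    the real system; by default it is shutil.which from the standard library.
    """
    return [tool for tool in tools if which(tool) is None]


# Typical use from a Python shell on the target machine:
#   missing_tools()   # an empty list means all three tools were found
```

An empty result means the stack's prerequisites are in place; any names returned are the tools to reinstall.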

Docker Compose Configuration

Create the file:

    nano docker-compose.yml

Paste the following content into docker-compose.yml:

    version: '3.8'

    services:
      postgres:
        image: postgres:15
        environment:
          POSTGRES_USER: n8n
          POSTGRES_PASSWORD: secretpassword  # sample value, change it
          POSTGRES_DB: n8ndb
        volumes:
          - db_data:/var/lib/postgresql/data
        ports:
          - "5432:5432"

      n8n:
        image: n8nio/n8n
        ports:
          - "5678:5678"
        environment:
          - DB_TYPE=postgresdb
          - DB_POSTGRESDB_HOST=postgres
          - DB_POSTGRESDB_PORT=5432
          - DB_POSTGRESDB_DATABASE=n8ndb
          - DB_POSTGRESDB_USER=n8n
          - DB_POSTGRESDB_PASSWORD=secretpassword
          # Basic auth is honored only by n8n releases before 1.0;
          # later releases ignore these three variables.
          - N8N_BASIC_AUTH_ACTIVE=true
          - N8N_BASIC_AUTH_USER=admin
          - N8N_BASIC_AUTH_PASSWORD=admin123
        volumes:
          - n8n_data:/home/node/.n8n
        depends_on:
          - postgres

    volumes:
      db_data:
      n8n_data:
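The passwords in the Compose file are sample values. One way to replace them, sketched here in Python, is to generate random secrets and keep them in a `.env` file next to `docker-compose.yml`, which Compose reads for `${VARIABLE}` substitution. The function name `make_env` and the choice of keys are assumptions for illustration; you would still need to reference the variables in the Compose file yourself (for example `POSTGRES_PASSWORD: ${POSTGRES_PASSWORD}`).

```python
import secrets


def make_env(keys=("POSTGRES_PASSWORD", "N8N_BASIC_AUTH_PASSWORD"), nbytes=24):
    """Return .env file text with one random URL-safe secret per key.

    secrets.token_urlsafe draws from the operating system's secure random
    source; 24 random bytes encode to a 32-character string.
    """
    lines = [f"{key}={secrets.token_urlsafe(nbytes)}" for key in keys]
    return "\n".join(lines) + "\n"


# Typical use: write the result to a file named .env in the project directory.
#   with open(".env", "w") as handle:
#       handle.write(make_env())
```

Keeping secrets in `.env` also lets you share the Compose file without leaking credentials, as long as `.env` itself stays out of version control.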

Launch the Stack

With all configurations in place and your services defined in Docker Compose, you’re just one step away from activating your own AI Agent Playground. This is where everything comes together—N8N, PostgreSQL, and your automation logic.

The launch process is simple, but it’s the key moment where your setup becomes a live, working system. Whether you’re deploying on a VPS or locally, starting your containers through Docker ensures your services are isolated, repeatable, and easy to maintain.

To bring your stack online, open your terminal and execute the following command:

    docker-compose up -d

This command tells Docker to pull the necessary images (if not already present), create the containers, and start running them in the background.
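Because the containers start in the background, N8N may need a little time before it accepts connections. The sketch below polls the instance until it answers. It assumes the port mapping from the Compose file and the `/healthz` endpoint that recent n8n releases expose; if your release lacks that endpoint, point the URL at the editor root instead. The function names are made up for this example.

```python
import time
import urllib.error
import urllib.request


def http_ok(url, timeout=3.0):
    """Return True if a GET request to url is answered with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error status.
        return False


def wait_until_ready(url="http://localhost:5678/healthz", attempts=30,
                     delay=2.0, probe=http_ok, sleep=time.sleep):
    """Poll url until probe succeeds.

    Returns the 1-based attempt number that succeeded, or None if every
    attempt failed. probe and sleep are injectable for testing.
    """
    for attempt in range(1, attempts + 1):
        if probe(url):
            return attempt
        if attempt < attempts:
            sleep(delay)
    return None


# Typical use right after starting the stack:
#   wait_until_ready()   # None means the instance never became reachable
```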

Once the containers are initialized, open the N8N dashboard in your browser at http://localhost:5678 (on a VPS, replace localhost with the server’s address).

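On n8n releases before 1.0, the basic-auth variables in the Compose file apply, and you’ll be prompted to log in with these default credentials:

- Username: admin

- Password: admin123

Releases from 1.0 onward ignore those variables and instead ask you to create an owner account on first launch. Either way, replace the sample passwords before exposing the instance to a network.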

Once inside, you’ll have full access to the visual workflow editor, ready to start creating AI-driven automations.

Congratulations: your AI Agent Playground is now fully operational and ready to build intelligent workflows on your own terms!

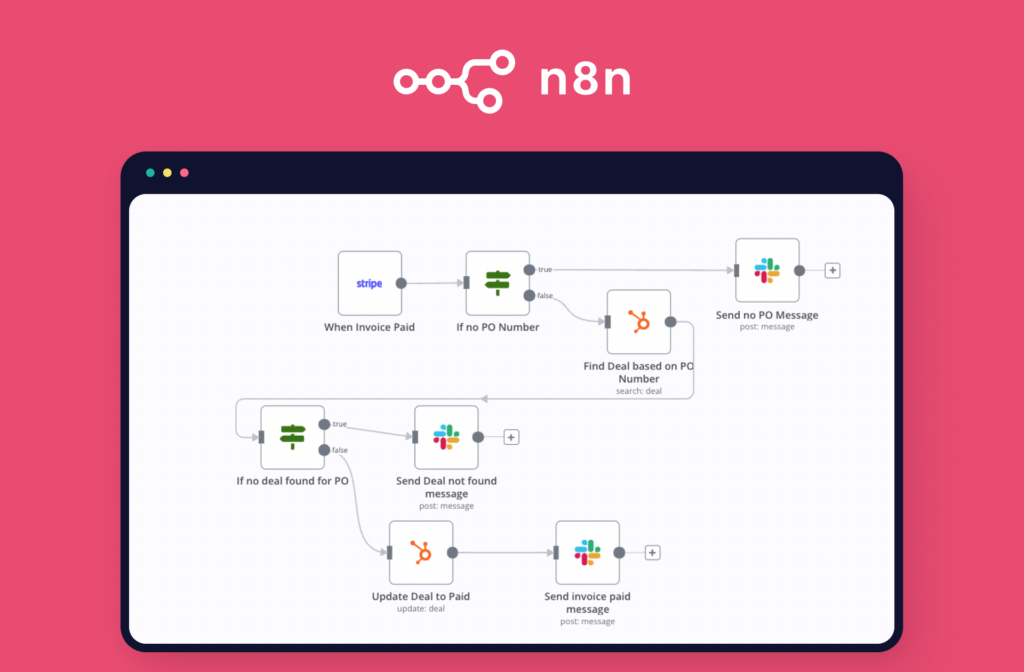

Create Your First AI Workflow

Once your playground is up and running, it’s time to build your first intelligent workflow. This is where the power of visual automation meets the capabilities of language models.

Let’s walk through the process of creating a basic AI agent using N8N:

- Create a New Workflow: Open N8N and click on “New Workflow” to begin.

- Set Up an HTTP Trigger: Add a Webhook node, N8N’s HTTP trigger. It acts as the entry point to your AI agent, allowing external systems to send prompts.

- Connect an LLM Node: Add the OpenAI node (or Ollama if using local models). Pass the request content from the trigger into the prompt field.

- Process the Output: Use a Set node or a Code node to clean, format, or transform the LLM response.

- Store the Conversation (Optional): Connect to your PostgreSQL node to store the interaction, allowing for future retrieval, analytics, or memory chaining.
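For the optional storage step, the following sketch shows one possible shape for the data. The table name `chat_messages`, its columns, and the helper `exchange_rows` are all hypothetical, and the `%s` placeholders assume a Python driver such as psycopg; inside N8N you would run equivalent SQL through the PostgreSQL node instead.

```python
# Hypothetical table for chat history; adjust names and types to your needs.
CREATE_TABLE_SQL = """
CREATE TABLE IF NOT EXISTS chat_messages (
    id          BIGSERIAL PRIMARY KEY,
    session_id  TEXT        NOT NULL,
    role        TEXT        NOT NULL CHECK (role IN ('user', 'assistant')),
    content     TEXT        NOT NULL,
    created_at  TIMESTAMPTZ NOT NULL DEFAULT now()
);
"""

# Parameterized insert: values are passed separately, never interpolated
# into the SQL string, which guards against SQL injection.
INSERT_SQL = (
    "INSERT INTO chat_messages (session_id, role, content) "
    "VALUES (%s, %s, %s);"
)


def exchange_rows(session_id, prompt, reply):
    """Return the parameter tuples for one exchange: user turn, then reply."""
    return [
        (session_id, "user", prompt),
        (session_id, "assistant", reply),
    ]
```

Storing each turn as its own row, keyed by a session identifier, makes it straightforward to load the last few messages of a conversation back into a prompt later.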

After activating the workflow, you can send HTTP requests to the webhook’s URL, and the agent will respond with answers, summaries, decisions, or custom outputs based on the input.
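As an illustration of calling the agent from outside, here is a small Python client built on the standard library. It assumes the trigger's path is `ai-agent` and that the workflow reads a JSON field named `prompt`; both are placeholders, so substitute whatever your own workflow uses. N8N serves active workflows under `/webhook/` and editor test runs under `/webhook-test/`.

```python
import json
import urllib.request


def build_prompt_request(prompt, path="ai-agent",
                         base_url="http://localhost:5678", test_mode=False):
    """Build a POST request that carries the prompt as a JSON body.

    path and the "prompt" field name are assumptions for this sketch.
    """
    prefix = "webhook-test" if test_mode else "webhook"
    url = f"{base_url.rstrip('/')}/{prefix}/{path.strip('/')}"
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_agent(prompt, **options):
    """Send the prompt to the workflow and return the raw response text."""
    request = build_prompt_request(prompt, **options)
    with urllib.request.urlopen(request, timeout=60) as response:
        return response.read().decode("utf-8")


# Typical use once the workflow is active:
#   ask_agent("Summarize today's support tickets")
```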

This is your foundation for building more advanced agents with memory, logic, and integration into the tools you already use.

Sample Use Cases

Now that your system is up and running, let’s explore how you can put it to real use. The true power of the N8N AI Agent Playground lies in its flexibility and adaptability — you can create agents tailored to your exact needs, whether it’s business automation, content processing, or real-time communication.

Here are just a few examples of what you can build with your new setup:

- Chatbot with memory and logic: Create conversational agents that not only answer questions but also remember previous context, log interactions, and evolve over time.

- Automated document summarizer: Use LLMs to process incoming files (PDFs, emails, scraped text) and generate concise summaries that are saved or sent.

- AI-powered CRM assistant: Automatically draft replies, flag leads, and generate follow-ups based on contact interactions and stored customer data.

- Slack or Telegram bot with AI intelligence: Integrate with messaging platforms to deploy smart bots that can answer questions, schedule tasks, or retrieve knowledge from the database.

- Email parser + text classifier: Build a backend system that reads emails, classifies them, and routes them to appropriate pipelines, with AI-generated summaries or replies.

These are just starting points — once your workflows are running, you can scale, adapt, and connect them with countless tools and APIs. The playground is yours to shape.

Add SSL + Domain (Optional)

If you plan to make your N8N AI Agent Playground accessible over the internet, securing it with HTTPS and a custom domain is essential. Not only does this protect your data, but it also gives your AI assistant a professional interface that users can trust.

Here’s how to set it up:

- Use Nginx as a reverse proxy

  - Nginx will forward incoming HTTPS requests to your running N8N instance on port 5678.

- Obtain a free SSL certificate with Let’s Encrypt

  - Use Certbot or integrate with Cloudflare DNS to automatically generate and renew certificates.

  - Let’s Encrypt certificates are valid for 90 days, so keep automatic renewal enabled; by default, Certbot renews a certificate once it is within 30 days of expiry.

- Configure your domain

  - Point your domain (e.g., agent.yourdomain.com) to the server’s public IP.

  - In Nginx, configure a proxy block to map traffic from port 443 (HTTPS) to your internal N8N port 5678.
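The proxy block described above could look roughly like the output of this Python sketch, which renders an Nginx server block as text. The function name `render_nginx_site` is made up for this example, the certificate paths assume Certbot's default layout under `/etc/letsencrypt/live/`, and the upgrade headers are included because the N8N editor may use WebSocket connections. Treat it as a starting point rather than a complete configuration.

```python
def render_nginx_site(domain, upstream_port=5678):
    """Return an Nginx server block that proxies HTTPS traffic to N8N.

    Certificate paths assume Certbot's default directory layout.
    """
    return f"""server {{
    listen 443 ssl;
    server_name {domain};

    ssl_certificate     /etc/letsencrypt/live/{domain}/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/{domain}/privkey.pem;

    location / {{
        proxy_pass http://127.0.0.1:{upstream_port};
        proxy_http_version 1.1;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";
    }}
}}
"""


# Typical use: print the block and save it as an Nginx site file.
#   print(render_nginx_site("agent.yourdomain.com"))
```

When N8N sits behind a proxy, it is usually also worth setting the `WEBHOOK_URL` environment variable on the n8n service to the public HTTPS address, so the webhook URLs shown in the editor match what external callers should use.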

This optional but highly recommended setup ensures your AI playground is protected and production-ready. Once complete, you’ll be able to access your agent securely at https://agent.yourdomain.com (substituting your own domain).

Future Expansions

Once your initial AI agent workflows are up and running, you may want to expand the system’s capabilities even further. The beauty of this modular setup is that it’s designed for continuous evolution — allowing you to add intelligence, scalability, and specialized features as your needs grow.

Here are some powerful extensions you can consider:

- Integrate LangChain workflows: Combine structured agent chains, tools, and memory with N8N to build highly dynamic reasoning systems. LangChain allows you to define agent logic more abstractly while still integrating it into visual workflows.

- Add pgvector for long-term memory: Extend your PostgreSQL database with pgvector to enable semantic search, vector storage, and persistent memory. This is especially useful for retrieval-augmented generation (RAG), knowledge assistants, and personalized agents.

- Build a FastAPI backend for advanced logic: Create REST APIs to extend your N8N agent with custom logic, asynchronous background tasks, or integration endpoints for external systems.

- Connect to Redis for context caching: Add high-speed in-memory caching using Redis to manage user session states, message buffers, or conversational context without overloading your primary database.
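To make the pgvector idea more concrete, the sketch below shows in plain Python the kind of ranking a vector search performs: each stored memory has an embedding, and the memories closest to the query embedding are returned first. The three-dimensional vectors and the function names are toy values for illustration; real embeddings come from a model and have hundreds of dimensions or more, and pgvector would run the comparison inside PostgreSQL (it offers a cosine distance operator, `<=>`).

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query, memories, k=2):
    """Return the texts of the k memories most similar to the query vector.

    memories is a list of (text, vector) pairs.
    """
    scored = [(cosine_similarity(query, vector), text) for text, vector in memories]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


# Toy data: hand-written vectors standing in for real embeddings.
memories = [
    ("invoice overdue", [1.0, 0.0, 0.0]),
    ("team lunch", [0.0, 1.0, 0.0]),
    ("payment reminder", [0.9, 0.1, 0.0]),
]
# top_k([1.0, 0.0, 0.0], memories) returns
# ["invoice overdue", "payment reminder"]
```

In a retrieval-augmented workflow, the texts returned this way would be prepended to the prompt so the model can answer with the relevant stored context.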

These expansions unlock the potential to evolve your AI Agent Playground into a production-grade, context-aware, and enterprise-level solution — ready to power real-world applications across domains.

Conclusion

You’ve just completed a major step toward mastering intelligent automation. By setting up your own N8N AI Agent Playground—with full integration of OpenAI and PostgreSQL—you now have a powerful system capable of processing tasks, generating responses, and managing data, all without writing traditional code.

This isn’t just a prototype or a toy project—it’s a solid foundation for building production-ready AI workflows, scalable assistants, and advanced automations that can adapt to your business, team, or personal goals.

With visual logic, LLMs, persistent memory, and API triggers at your fingertips, you’re in full control of how AI fits into your world.

And this is just the beginning. Continue to experiment, expand, and integrate. The playground is yours.

Looking for more advanced walkthroughs, expert builds, and real-world AI strategies? Visit [REVOLD BLOG](https://blog.revold.us), your hub for AI innovation and automation mastery, for expert-level tutorials on building real AI products.