If you’re looking to install AnythingLLM with Docker, you’re taking a major step toward creating a secure, flexible, and powerful local AI workspace.

AnythingLLM is a user-friendly platform for managing local LLMs. In today’s fast-evolving AI landscape, running your own infrastructure is increasingly a necessity rather than a luxury. By self-hosting AnythingLLM, you gain full control over your data, reduce reliance on third-party services, and open the door to extensive customization.

Whether you’re a developer building private chat systems, a data engineer experimenting with document Q&A, or a business leader deploying secure knowledge assistants, AnythingLLM adapts to your goals.

In this educational walkthrough, we’ll cover the full installation of AnythingLLM using Docker, ensuring you walk away with a production-ready AI system that’s self-hosted, secure, and extensible.

Stack Overview

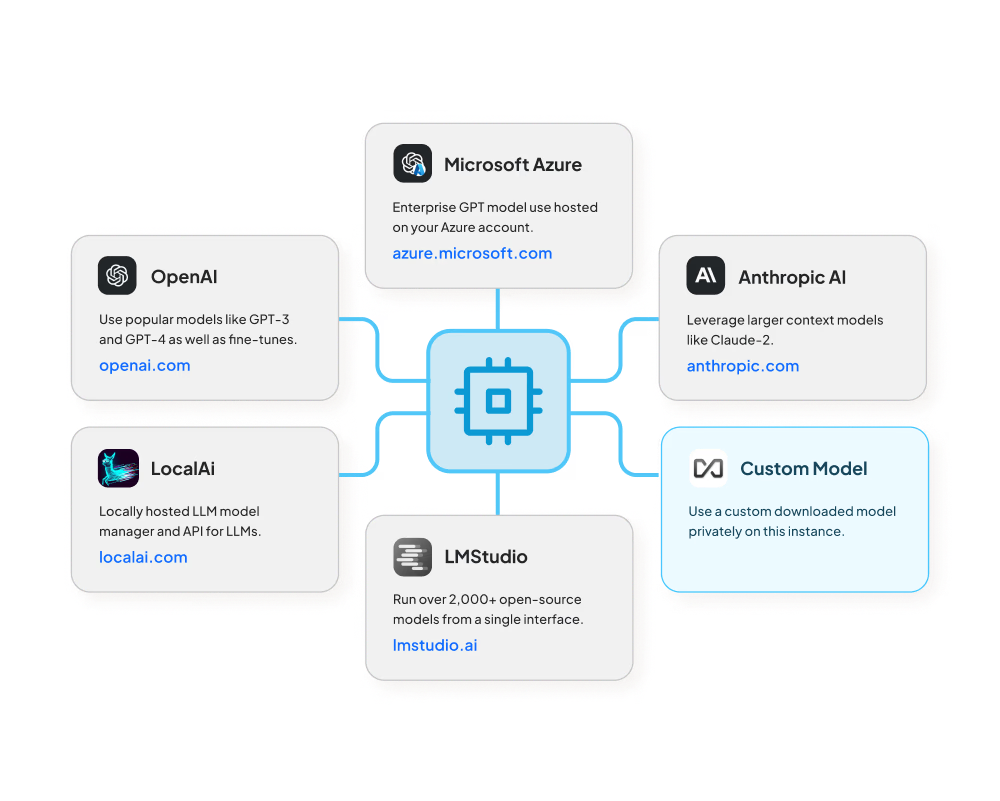

To truly understand the power of AnythingLLM, it’s important to explore the architecture that makes this platform so versatile. AnythingLLM is designed as a modular, self-hosted solution that gives you full control over how language models interact with data, documents, and users.

The stack consists of several core components that work together seamlessly. Each piece plays a specific role—from handling chat interfaces and vector search, to managing permissions and local inference. By leveraging Docker, all of these components are isolated yet orchestrated as a single unified environment.

This modular design ensures you can customize the system for your needs—whether you’re building a private research assistant, internal documentation bot, or multi-user enterprise AI interface.

Here’s what we’ll be installing:

- AnythingLLM Core – Main application with chat UI and backend logic

- Document Sources – File connectors for local PDFs, Markdown files, etc.

- Vector Database (optional) – PostgreSQL + pgvector or Qdrant

- Local LLMs – Integration with Ollama for local inference

- Auth & Access – Admin panel, API tokens, and chat user roles

We’ll run all components inside Docker containers for isolation, reproducibility, and ease of updates.

Preparation

Setting up AnythingLLM isn’t just about pulling containers and running commands—it’s about preparing a strong and reliable foundation that ensures every feature works flawlessly. To help you get the most out of this powerful LLM platform, it’s essential to start with the right tools and environment.

In this section, we’ll walk through everything you need to install and configure before launching AnythingLLM. These tools will allow you to:

- Run and manage Docker containers

- Clone and configure the latest version of the AnythingLLM source code

- Enable development capabilities such as plugin building and UI customizations

- Connect and host local or cloud-based LLMs

- Utilize vector databases for semantic document search and memory storage

Let’s get your system fully equipped and ready for a complete AnythingLLM deployment.

Required Tools

These are essential:

- Git (to clone the repository)

sudo apt install git

- Node.js + npm (for development or local plugin customization)

curl -fsSL https://deb.nodesource.com/setup_18.x | sudo -E bash -

sudo apt install -y nodejs

- Ollama (for running local LLMs)

- Follow the official instructions at: https://ollama.com/

- Optional: PostgreSQL + pgvector if you want to bring your own database

sudo apt install postgresql

- Set up extensions for vector search using pgvector

- Optional: Qdrant (if using the built-in vector DB)

- Included in Docker, but can be installed separately if desired
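Before moving on, it can help to confirm that the tools are actually on your PATH. The following is a minimal sketch, not part of AnythingLLM: `check_tools` is a hypothetical helper name, and `docker` is included in the check only because the later steps depend on it.

```shell
# Report which of the given commands are available on PATH.
# Returns non-zero if at least one of them is missing.
check_tools() {
    missing=0
    for tool in "$@"; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "found: $tool"
        else
            echo "missing: $tool"
            missing=1
        fi
    done
    return "$missing"
}

# Tool names follow the list above; adjust them to the optional pieces you plan to use.
check_tools git node npm ollama docker || echo "Install the missing tools before continuing."
```

The function only checks that a command exists, not which version is installed, so a `node --version` or `docker --version` check is still worth doing by hand.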

Project Setup

Once all essential tools are installed, it’s time to organize your workspace and prepare the project files. This ensures that your AnythingLLM instance is set up in a clean, modular environment and is easy to manage or move across systems.

Follow these steps carefully to structure the project directory and pull in the application source:

- Install Docker + Docker Compose

sudo apt update && sudo apt install -y docker.io docker-compose curl

- Create working directory

mkdir anythingllm && cd anythingllm

- Clone the repository

git clone https://github.com/Mintplex-Labs/anything-llm .

- Copy .env.example to .env

cp .env.example .env

Edit .env to define ports, LLM provider, database config, and vector options.
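If you prefer to script the `.env` edits rather than open an editor, a small helper can set each value without creating duplicate lines. This is a sketch under stated assumptions: `set_env_var` is a hypothetical helper, and the key names (`PORT`, `LLM_PROVIDER`) are the ones used in this article, so check `.env.example` for the names your version of AnythingLLM actually expects.

```shell
# Set KEY=VALUE in an env file: replace the line if KEY already exists,
# otherwise append it. Intended for simple values without backslashes.
set_env_var() {
    key=$1
    value=$2
    file=$3
    touch "$file"
    if grep -q "^${key}=" "$file"; then
        # Rewrite through a temp file to avoid GNU/BSD differences in sed -i
        tmp=$(mktemp)
        awk -v k="$key" -v v="$value" \
            'index($0, k "=") == 1 { print k "=" v; next } { print }' \
            "$file" > "$tmp"
        cat "$tmp" > "$file" && rm -f "$tmp"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}

# Assumed key names, taken from this article
ENV_FILE=${ENV_FILE:-.env}
set_env_var PORT 3001 "$ENV_FILE"
set_env_var LLM_PROVIDER ollama "$ENV_FILE"
```

Running the helper twice with the same key leaves a single line for that key, which keeps the file safe to manage from a provisioning script.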

Docker Compose Configuration

AnythingLLM comes with a prebuilt docker-compose.yml that sets up all essential services. This YAML file defines how each container—such as the application backend, vector database (Qdrant), and optional Nginx proxy—interacts with the system.

You can start with the default configuration, but it’s often a good idea to customize resource limits, ports, volumes, and environment variables to match your deployment style (local or production).

To start the stack, run:

docker-compose up -d

This will automatically pull the necessary images, build the containers, and launch the application.

The Docker stack includes:

- AnythingLLM core service (Node.js app + chat interface)

- Optional Qdrant service for vector embeddings

- Nginx reverse proxy (if you’re using a domain + SSL).
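To see which of these services your Compose file really defines and whether they are running, you can query Compose directly. The sketch below assumes the Compose file is in the current directory; `compose_cmd` is a hypothetical helper that picks between the newer `docker compose` plugin and the standalone `docker-compose` binary used in this article.

```shell
# Print the Compose command available on this machine:
# "docker compose" (v2 plugin) if it responds, else "docker-compose" (standalone).
compose_cmd() {
    if docker compose version >/dev/null 2>&1; then
        echo "docker compose"
    elif command -v docker-compose >/dev/null 2>&1; then
        echo "docker-compose"
    else
        echo "no Docker Compose found" >&2
        return 1
    fi
}

if COMPOSE=$(compose_cmd); then
    $COMPOSE config --services   # list the services defined in the Compose file
    $COMPOSE ps                  # show the state of each container
    $COMPOSE logs --tail=20      # last 20 log lines per service
fi
```

If `config --services` does not list Qdrant or Nginx, those services are not part of the file you are running and would need to be added or run separately.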

Environment Configuration

The .env file is your main configuration control center. It allows you to easily change how AnythingLLM behaves without modifying code or rebuilding containers.

You should review and adjust key values depending on your goals. Here are a few important ones:

- LLM_PROVIDER=ollama or openai – Choose your inference backend

- VECTOR_DB=qdrant or pgvector – Define the semantic memory engine

- ALLOW_FILE_UPLOADS=true – Enable direct file ingestion in the UI

- PORT=3001 – Set the listening port for the app

Advanced fields include:

- Authentication: enable tokens or login sessions

- Upload limits: restrict file size or formats

- Workspace defaults: configure onboarding flows or multi-user access.
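A quick pre-flight check can catch a missing or empty value before the containers start. This is a minimal sketch: `check_env_keys` is a hypothetical helper, and the key names are the ones listed in this article, which may differ from the names in your copy of `.env.example`.

```shell
# Return non-zero if any of the given keys lacks a non-empty KEY=value line in the file.
check_env_keys() {
    file=$1
    shift
    status=0
    for key in "$@"; do
        if ! grep -q "^${key}=." "$file" 2>/dev/null; then
            echo "not set: $key"
            status=1
        fi
    done
    return "$status"
}

# Assumed key names, taken from the list above
check_env_keys .env LLM_PROVIDER VECTOR_DB PORT || echo "Review .env before starting the stack."
```

The check treats `PORT=` with nothing after the equals sign as unset, which is usually what you want for required settings.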

Launch the System

Once your environment variables are in place and your Docker Compose file is ready, you’re just one step away from running your AI system.

Launch the stack with:

docker-compose up -d

This will start all services in detached mode.

After a few seconds, open your browser and visit:

http://localhost:3001
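Instead of guessing how long "a few seconds" is, you can poll the address until the app answers. The sketch below assumes `curl` is installed (it is part of the install command used earlier); `wait_for_url` is a hypothetical helper, and the attempt count and delay are arbitrary defaults.

```shell
# Poll a URL until it answers with a successful HTTP status, or give up.
# Arguments: URL, number of attempts (default 30), seconds between attempts (default 2).
wait_for_url() {
    url=$1
    attempts=${2:-30}
    delay=${3:-2}
    i=1
    while [ "$i" -le "$attempts" ]; do
        # -f makes curl fail on HTTP errors, -s/-S keep it quiet except for errors
        if curl -fsS -o /dev/null "$url" 2>/dev/null; then
            echo "ready after $i attempt(s): $url"
            return 0
        fi
        sleep "$delay"
        i=$((i + 1))
    done
    echo "not reachable after $attempts attempt(s): $url" >&2
    return 1
}
```

For the setup in this article the call would be `wait_for_url http://localhost:3001 30 2`, which waits up to about a minute before reporting a failure.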

There, you’ll be greeted by the AnythingLLM dashboard. Use the default credentials to log in:

- Username: admin

- Password: admin123

On your first login, you’ll be prompted to:

- Create an admin account

- Set a workspace name

- Connect your preferred LLM provider

- Optionally upload initial documents

That’s it! You now have a powerful AI workspace ready to serve local language model interactions, document Q&A, and intelligent workflow management.

Extend and Customize

Once you have the core system running, AnythingLLM can grow with your needs. Its modular design means you can expand its functionality to fit almost any AI workflow scenario. Here are some ways to take your setup to the next level:

- Integrate LangChain or Hugging Face Transformers: Add advanced embeddings, agent chains, and memory components for more sophisticated language interactions.

- Run advanced LLMs locally via Ollama: Use models like LLaMA, Mistral, or CodeLlama entirely offline for better performance and privacy.

- Set up secure reverse proxy with SSL: Use Nginx and Let’s Encrypt to enable HTTPS access to your platform and enforce secure authentication.

- Support additional file formats: Extend document processing by adding support for DOCX, HTML, CSV, and custom scripts.

- Connect external APIs or tools: Automate workflows with tools like Zapier, Notion, Airtable, or even custom REST services.

With AnythingLLM, you’re not limited by the UI—you can customize, scale, and enhance as much as needed.
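For the local-model option above, the models have to be downloaded through Ollama before AnythingLLM can use them. This is a minimal sketch using the `ollama pull` and `ollama list` commands; `pull_models` is a hypothetical wrapper, and the model tags in the usage note are examples that you should check against the Ollama model library.

```shell
# Pull each named model with the Ollama CLI, then list what is installed locally.
pull_models() {
    if ! command -v ollama >/dev/null 2>&1; then
        echo "ollama is not installed" >&2
        return 1
    fi
    for model in "$@"; do
        # Stop at the first model that fails to download
        ollama pull "$model" || return 1
    done
    ollama list
}
```

A call such as `pull_models mistral codellama` downloads both models, which can take several gigabytes of disk space each, and then prints the local model list.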

Conclusion

Congratulations! You’ve just set up a full-featured, private AI assistant stack using AnythingLLM. What you now have is more than just a tool—it’s a foundation for an entire ecosystem of AI automation, document intelligence, and secure language modeling.

AnythingLLM empowers users to:

- Run open models locally (for example through Ollama) or connect to commercial model providers

- Connect vector databases for retrieval-based chat

- Maintain full control over all files, logs, and usage data

- Create intelligent agents that interact with documents and APIs

By using Docker, your stack is easy to maintain, portable across environments, and simple to scale or replicate. Whether you’re launching it for yourself, your team, or your customers—you’re building on solid infrastructure.

Ready to go further? Explore more tutorials, integrations, and advanced setups at REVOLD BLOG—your trusted space for AI system design and innovation.