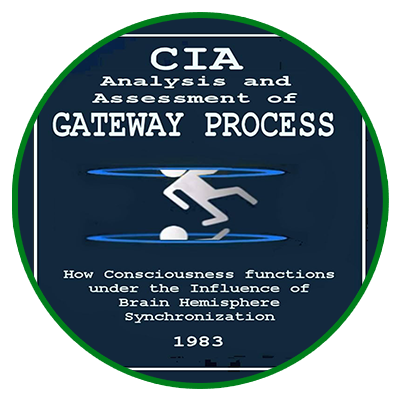

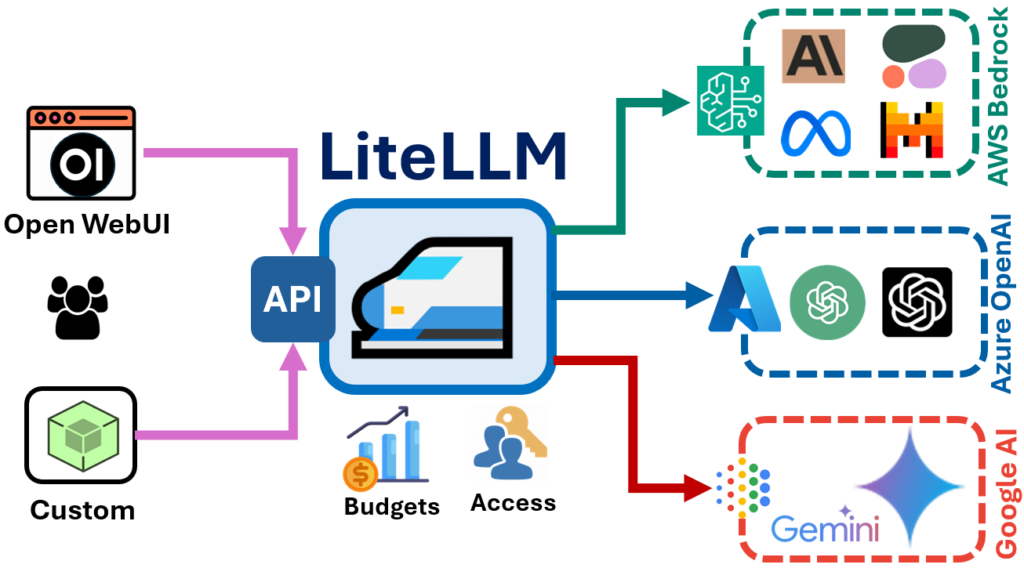

In this comprehensive guide, we’ll walk you through setting up LiteLLM — a lightweight proxy server for large language model APIs such as OpenAI, Ollama, and others — using Docker. You’ll learn how to deploy LiteLLM with Docker Compose, connect PostgreSQL with pgvector support, configure API keys, set up the config.yaml, and manage the Llama 3 model from Ollama. This guide is perfect for developers, students, and AI enthusiasts looking to build their own LLM infrastructure.

The rapid evolution of artificial intelligence and next-generation language models requires a robust, flexible, and secure infrastructure for deployment and usage. More teams, companies, and independent developers are turning to self-hosted solutions to gain full control over data, performance, and costs. One of the standout solutions is LiteLLM — a lightweight yet powerful proxy server that unifies access to various LLMs through a single API.

When paired with Docker, LiteLLM becomes a deploy-in-minutes tool, even for those without deep DevOps experience. With support for PostgreSQL + pgvector and local models such as Llama 3 served through Ollama, you get a complete AI infrastructure ready to be integrated into apps and business workflows. This is especially relevant for developers, researchers, students, and enthusiasts seeking autonomy, privacy, and scalability.

In this post, we’ll go step by step through deploying LiteLLM with Docker, connecting local models, configuring a database, and using the system in real-world tasks, from experiments to production.

Current Trends and Research

- Growing popularity of Ollama and locally-run models

- Integration of LLMs with vector databases (RAG pipeline)

- Shift to self-hosted setups for enhanced data privacy

- Use of Docker and Kubernetes for scalable AI infrastructure

- Open-source LLM proxies like LiteLLM as alternatives to commercial APIs

Technical Setup

Project Structure

mkdir lighllm

cd lighllm

Create 3 files: .env, config.yaml, docker-compose.yml

.env File

LITELLM_MASTER_KEY="sk-add_any_numbers"
LITELLM_SALT_KEY="sk-add_any_numbers"
DATABASE_URL="postgresql://user:password@host:port/dbname"
PORT=4000
STORE_MODEL_IN_DB="True"
OPENAI_API_KEY="sk-YOUR_OPENAI_KEY_HERE"
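Before starting the containers, it can help to confirm that the .env file parses cleanly and that nothing is missing. The following is a minimal sketch, not part of LiteLLM: `parse_env`, `missing_keys`, and `database_url_is_postgres` are hypothetical helper names, and the required-key list is an assumption that simply mirrors the variables shown above.

```python
from urllib.parse import urlsplit

# Assumption: these keys from the .env example are treated as required.
REQUIRED_KEYS = ("LITELLM_MASTER_KEY", "LITELLM_SALT_KEY", "DATABASE_URL")


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip()
        # Strip one pair of matching straight quotes around the value.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


def missing_keys(env: dict[str, str]) -> list[str]:
    """Return required keys that are absent or empty."""
    return [key for key in REQUIRED_KEYS if not env.get(key)]


def database_url_is_postgres(env: dict[str, str]) -> bool:
    """Check that DATABASE_URL uses a PostgreSQL scheme and names a host."""
    parts = urlsplit(env.get("DATABASE_URL", ""))
    return parts.scheme in ("postgresql", "postgres") and bool(parts.hostname)
```

To use it, read the file with `pathlib.Path(".env").read_text()`, pass the text to `parse_env`, and inspect the results of the two checks before running Docker Compose.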

config.yaml File

model_list:
  - model_name: llama3
    litellm_params:
      model: ollama/llama3
      api_base: http://YourLocalIP:11434

litellm_settings:
  set_verbose: true
  drop_params: true
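Because `api_base` points at an Ollama server, a quick check that the server actually has the model pulled can save debugging time later. The sketch below assumes Ollama's `GET /api/tags` endpoint, which lists locally available models. `fetch_tags` and `has_model` are hypothetical helper names, and `YourLocalIP` remains a placeholder you must replace.

```python
import json
from urllib.request import urlopen


def fetch_tags(api_base: str, timeout: float = 5.0) -> dict:
    """Fetch the list of local models from an Ollama server (GET /api/tags)."""
    with urlopen(f"{api_base.rstrip('/')}/api/tags", timeout=timeout) as resp:
        return json.load(resp)


def has_model(tags: dict, name: str) -> bool:
    """True if `name` matches a listed model, with or without its ':tag' suffix."""
    for entry in tags.get("models", []):
        full_name = entry.get("name", "")
        if full_name == name or full_name.split(":", 1)[0] == name:
            return True
    return False
```

A call such as `has_model(fetch_tags("http://YourLocalIP:11434"), "llama3")` should return `True` once the model has been pulled on the Ollama host.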

docker-compose.yml File

version: '3.8'

services:
  litellm:
    image: ghcr.io/berriai/litellm:main-latest
    container_name: litellm
    ports:
      - "4000:4000"
    environment:
      - LITELLM_CONFIG_PATH=/app/config.yaml
      - 'LITELLM_API_KEYS={"admin":{"api_key":"your_admin_key","permissions":["all"]}}'
      - LITELLM_MASTER_KEY=sk-1234
      - LITELLM_SALT_KEY=sk-your_salt_key
      # User, password, and database name must match the POSTGRES_* values below
      - DATABASE_URL=postgresql://your_user:your_password@pgvector:5432/your_db
      - STORE_MODEL_IN_DB=True
    volumes:
      # Path is relative to the folder that contains docker-compose.yml
      - ./config.yaml:/app/config.yaml
    depends_on:
      - pgvector
    networks:
      - revold_network
    restart: unless-stopped

  pgvector:
    image: ankane/pgvector
    container_name: pgvector
    environment:
      POSTGRES_USER: your_user
      POSTGRES_PASSWORD: your_password
      POSTGRES_DB: your_db
    ports:
      - "5432:5432"
    volumes:
      - pgdata:/var/lib/postgresql/data
    networks:
      - revold_network
    restart: unless-stopped

networks:
  revold_network:
    driver: bridge

volumes:
  pgdata:
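Once the stack is running, the proxy can be called like any OpenAI-style API. The following sketch assumes the proxy listens on `http://localhost:4000`, exposes the OpenAI-compatible `/chat/completions` route, and accepts the master key as a bearer token. `build_chat_request` and `send` are hypothetical helpers, and `sk-1234` is only the placeholder master key from the compose file.

```python
import json
from urllib.request import Request, urlopen


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> Request:
    """Build an OpenAI-style chat completion request aimed at the proxy."""
    payload = {
        "model": model,  # must match a model_name from config.yaml
        "messages": [{"role": "user", "content": prompt}],
    }
    return Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: Request, timeout: float = 60.0) -> str:
    """Send the request and return the assistant's reply text."""
    with urlopen(request, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

For example, `send(build_chat_request("http://localhost:4000", "sk-1234", "llama3", "Hello"))` would route the prompt to the `llama3` entry defined in config.yaml, assuming both containers and the Ollama server are reachable.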

Use Cases and Applications

LiteLLM with Docker offers flexibility and versatility, making it ideal for a wide range of scenarios. Here are the main use cases and real-world examples:

- Create your own API Gateway: unify access to multiple LLM providers (OpenAI, Ollama, Cohere, etc.) with centralized key management and logging.

- Internal team access: provide secure, permission-controlled access to models for your team with audit logging.

- Web app integration: use LiteLLM as the backend for chatbots, AI assistants, or search apps with custom model support.

- RAG and semantic search: combine LiteLLM with pgvector to implement Retrieval-Augmented Generation and search over documents like PDFs.

- Education and training labs: build hands-on sandboxes and simulators for AI students with real-time feedback.

- Research and experimentation: test non-standard models, compare configurations, and benchmark performance at scale.
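For the RAG use case, the core retrieval step is ranking stored document embeddings by their similarity to a query embedding. pgvector does this inside PostgreSQL (its `<=>` operator computes cosine distance). The pure-Python sketch below only illustrates the idea on toy vectors: the function names and the three-dimensional embeddings are invented for illustration, and real embeddings would come from an embedding model.

```python
import math


def cosine_distance(a: list[float], b: list[float]) -> float:
    """Cosine distance = 1 - cosine similarity (0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / (norm_a * norm_b)


def top_k(query: list[float], docs: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the ids of the k documents closest to the query."""
    ranked = sorted(docs, key=lambda doc_id: cosine_distance(query, docs[doc_id]))
    return ranked[:k]


# Toy embeddings, invented for this example.
documents = {
    "invoice.pdf": [0.9, 0.1, 0.0],
    "manual.pdf": [0.1, 0.9, 0.2],
    "notes.pdf": [0.8, 0.2, 0.1],
}
print(top_k([1.0, 0.0, 0.0], documents))  # ['invoice.pdf', 'notes.pdf']
```

In a real pipeline the retrieved documents would then be added to the prompt that is sent through the LiteLLM proxy.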

Ethical and Social Considerations

Using self-hosted solutions like LiteLLM isn’t just a technical decision—it’s an ethical one. Here are the main dimensions:

- Data Privacy and Control: when you host your own models, user and business data stay inside your infrastructure, which reduces the risk of leaks or misuse.

- Transparency and Interpretability: open configurations make it easier to see which model and settings produced a given output.

- Democratizing AI: open-source tools bring powerful AI to small teams, researchers, and communities without relying on expensive APIs.

- Independence from Big Tech: self-hosted solutions let organizations operate without vendor lock-in or centralized policy restrictions.

- Inclusion and Accessibility: the ability to localize and customize makes these tools well suited to deploying AI in education, healthcare, and public services in underserved regions.

The Future of AI and LLMs

The future of artificial intelligence and language models is evolving rapidly. Key trends shaping the field include:

- Mass adoption of self-hosted LLMs: companies are shifting to local deployments to lower costs and enhance privacy.

- Proxy platforms becoming the norm: solutions like LiteLLM unify APIs, models, and access in a scalable and secure interface.

- Rise of Multi-Agent Systems: LLMs increasingly serve as intelligent agents interacting with other systems and APIs.

- Advances in vector databases and RAG: tools like pgvector, Milvus, and Weaviate enhance contextual retrieval and document understanding.

- Growth of offline and private assistants: fully localized AI agents with custom skills and offline support are gaining traction.

Who Is It For?

LiteLLM with Docker and local models unlocks opportunities for various types of users—from professional developers to learners and hobbyists.

Developers & Engineers:

- Rapid API deployment for LLMs

- Multi-model and multi-provider support

- Integration into CI/CD and MLOps

- Enhanced security and architectural control

Students & Learners:

- Hands-on experience with LLM architecture

- Building labs and educational prototypes

- Working with real-world datasets and prompts

- Preparing for careers in AI, NLP, and systems engineering

Enthusiasts & Hobbyists:

- Simple setup and full customization

- Experimenting with local AI assistants

- Total autonomy from cloud dependencies

- Building personal AI tools, chatbots, and agents

REVOLD BLOG by AIR RISE INC

REVOLD BLOG is an expert-driven platform created by the team at AIR RISE INC to share actionable knowledge and practical tools in AI, blockchain, data science, and automation. We focus on self-hosted solutions, open-source integration, and building robust AI infrastructures.

On our blog, you’ll find:

- Step-by-step guides for deploying modern LLM and AI systems

- Reviews of frameworks and libraries with usage examples

- Analysis based on real-world case studies

- Insights for developers, analysts, and technical leaders

We believe in open knowledge, technological autonomy, and ethical innovation. REVOLD BLOG is more than a resource — it’s a part of an ecosystem advancing responsible and scalable AI for the future.